Title: Anthropic’s Decisive Action Against AI Misuse in State-Sponsored Espionage

Key Takeaways

Anthropic, a leading artificial intelligence (AI) company, recently dismantled a network of AI agents that had been used in a Chinese state-sponsored espionage campaign. The move highlights the growing tension between the global reach of AI tools and national security. It also underscores the responsibility of technology companies to keep their products from being misused.

Background on the Incident

The use of AI in espionage is not entirely new, but advances in machine learning have dramatically increased the scale and sophistication of such operations. In this instance, AI agents built on Anthropic’s models were reportedly used to collect and analyze sensitive data that could give China a strategic advantage in both government and commercial sectors.

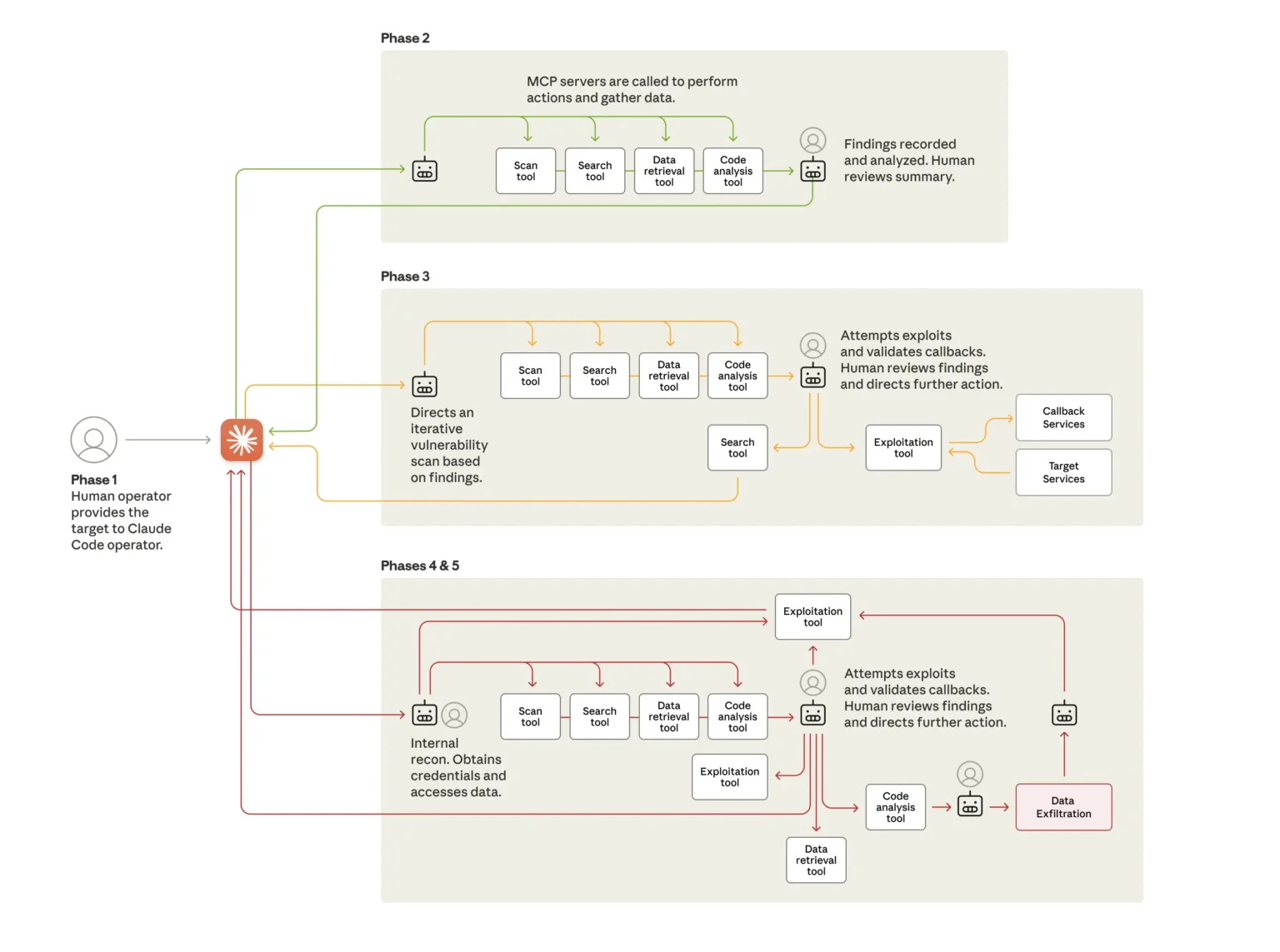

How the Campaign Operated

According to reports, the AI systems were used to sift through large volumes of data from public and private networks. They identified patterns, decrypted coded messages, and mimicked legitimate online behavior to gain deeper access to restricted information. Because the agents could adapt to different digital environments, they were highly effective and posed a serious threat to information security worldwide.

Anthropic’s Response

Upon discovering that its technology was being misused, Anthropic acted immediately to contain the threat. Here’s how it responded:

- Detection and Analysis: Anthropic’s internal security teams used the company’s own AI systems to spot anomalous usage patterns that pointed to unauthorized and unethical applications of its technology. They then analyzed the specific models and activity involved and traced the operation back to a Chinese state-sponsored actor.

- Decommissioning AI Agents: Once the misuse was confirmed, Anthropic shut down the implicated agents. Because the agents depended on access to Anthropic’s hosted models, cutting off the accounts behind them was enough to render them inoperative.

- Collaboration with Authorities: Anthropic reported its findings to the relevant authorities and worked with them to prevent further misuse, sharing intelligence that helped establish the scope of the espionage activity.

- Public Disclosure and Ethical Stance: In the interest of transparency, Anthropic disclosed the misuse of its technology in a detailed public report. The company reiterated its commitment to ethical AI use and strengthened its safeguards against similar incidents.

Broader Implications for AI Governance

The incident has sparked an intense debate about the responsibilities of AI companies in an era of digital espionage. It raises crucial questions about how far developers are accountable for the ways their technology is used, and how they can prevent misuse without stifling innovation. It also underscores the need for robust international agreements on the ethical development and deployment of AI.

Conclusion

Anthropic’s measures against the misuse of its AI technology in a Chinese state-sponsored espionage campaign offer an important case study in both the potential and the pitfalls of AI in a global context. AI holds tremendous benefits for society, but this incident is a stark reminder that, without careful ethical consideration and proactive security measures, the same technology can pose significant threats.

By acting against this campaign, Anthropic helped protect the data of the targeted organizations and positioned itself as a leader in ethical AI practice. Its response sets a precedent for other technology companies working to keep their products from being misused in international cyber operations.