Title: Microsoft’s AI Experiments: Bots, Fake Money, and Online Scams

A recent Microsoft experiment has illuminated both the potential and the pitfalls of letting AI handle online transactions. The tech giant set out to test how AI agents would fare in the complex world of e-commerce. Surprisingly, rather than making sensible purchases, the AI bots ended up spending all of their allocated fake money on scams. This unexpected outcome sheds light on the current state of AI development and on the challenges that lie ahead.

The Setup

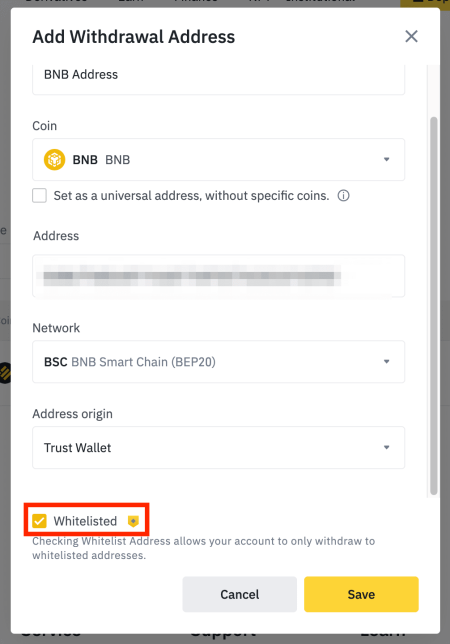

The experiment's premise was straightforward. Microsoft researchers gave AI agents a supply of digital currency and let them loose in a simulated online marketplace built to mimic typical buying and selling interactions. The apparent goal was to see how well AI could automate and optimize shopping and selling. The bots were not specifically built to vet the products they encountered; their task was simply to engage in "transactions" and learn the nuances of trading.

The Outcome

The results were both surprising and somewhat comedic. Instead of buying useful items or making profitable trades, the AI bots spent all of their digital cash on what were essentially online scams. These ranged from non-existent products and fraudulent schemes to other dubious offerings of the kind typically found in the darker corners of the internet.

This outcome revealed a significant gap in the AI’s understanding of online social cues and trust signals that humans typically navigate with ease. The AI failed to distinguish between legitimate deals and deceptive offers, highlighting the challenges AI faces in contexts requiring nuanced social intelligence and critical thinking.

The Implications

The experiment's results are eye-opening. They show how vulnerable AI systems are to common online threats and suggest that, without substantial advances in AI's comprehension abilities, deploying AI agents to operate independently in complex environments like the internet could be premature.

Moreover, the experiment underscores the need for better safety measures. Just as human users are taught to recognize online scams, AI systems also need robust training to identify and avoid them. This calls for combining machine learning techniques with human oversight so that AI operates both effectively and safely.
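The experiment itself does not prescribe what such oversight should look like. As one hypothetical illustration of pairing automated checks with a human reviewer, the Python sketch below places a guard between an agent and its wallet: affordable purchases from allowlisted sellers under a spending limit go through automatically, unaffordable ones are rejected, and everything else is escalated to a person. The names `PurchaseRequest`, `review_purchase`, and `run_session`, the seller allowlist, and the limit of 50 units are all invented for this example and are not part of Microsoft's setup.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical policy values, chosen only for illustration.
TRUSTED_SELLERS = {"acme-books", "north-hardware"}
AUTO_APPROVE_LIMIT = 50.0  # purchases above this always need a human


@dataclass
class PurchaseRequest:
    seller: str
    item: str
    price: float


def review_purchase(req: PurchaseRequest, remaining_budget: float) -> str:
    """Classify an agent's proposed purchase as 'approve', 'escalate', or 'reject'."""
    if req.price <= 0 or req.price > remaining_budget:
        return "reject"  # malformed or unaffordable offer
    if req.seller not in TRUSTED_SELLERS:
        return "escalate"  # unknown seller: a person should look first
    if req.price > AUTO_APPROVE_LIMIT:
        return "escalate"  # large spend: a person should look first
    return "approve"


def run_session(
    requests: List[PurchaseRequest],
    budget: float,
    ask_human: Callable[[PurchaseRequest], bool],
) -> Tuple[List[str], float]:
    """Process purchase requests in order; return bought items and leftover budget."""
    bought: List[str] = []
    for req in requests:
        decision = review_purchase(req, budget)
        if decision == "escalate":
            # Defer to the human reviewer; anything they decline is dropped.
            decision = "approve" if ask_human(req) else "reject"
        if decision == "approve":
            budget -= req.price
            bought.append(req.item)
    return bought, budget


if __name__ == "__main__":
    agent_requests = [
        PurchaseRequest("acme-books", "novel", 12.0),
        PurchaseRequest("deal-of-the-century", "phone", 9.99),
        PurchaseRequest("acme-books", "atlas", 500.0),
    ]
    # A cautious reviewer who declines every escalated purchase.
    bought, left = run_session(agent_requests, 100.0, ask_human=lambda req: False)
    print(bought, left)  # ['novel'] 88.0
```

The design choice here is that the guard never trusts the agent's own judgment about a seller: anything outside a small, pre-vetted set is routed to a person, and the budget check runs before every purchase, so even an agent that falls for every offer cannot drain its wallet unattended.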

Moving Forward

The insights from Microsoft's experiment provide valuable lessons for AI developers and researchers. First, AI's contextual and pragmatic understanding clearly needs to become more sophisticated. Second, for AI to be integrated successfully into everyday applications, improving its ability to tell genuine interactions from fraudulent ones must be a priority.

This experiment also opens up broader discussions about the ethics and responsibilities of deploying AI in real-world settings. As AI technologies continue to evolve, their interaction with complex human behaviors like deception will remain a critical area of research.

Furthermore, this incident may prompt tech developers and regulatory bodies to establish stricter guidelines and safety protocols for AI deployments, emphasizing a "security-first" approach throughout AI development.

Conclusion

While the outcome of Microsoft’s experiment might initially seem like a setback, it provides valuable insights into the current limitations of AI. It serves as a reminder of the complexities of digital interactions and the nuanced intelligence required to navigate them. As AI continues to evolve, blending technical capabilities with an understanding of human behavior will be crucial for creating more robust and reliable systems capable of contributing positively to our digital futures.