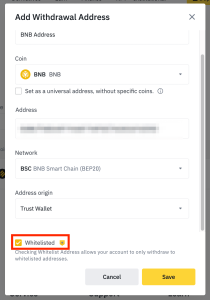

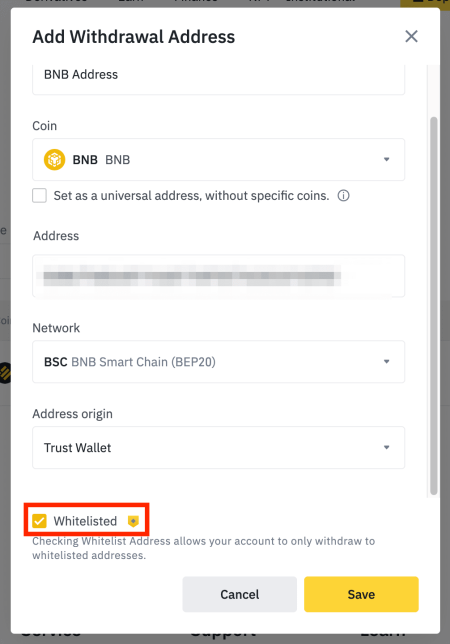

NVIDIA's NVL72 systems are changing how large mixture-of-experts (MoE) models are deployed at scale by using Wide Expert Parallelism (Wide EP) to raise performance and lower costs. In an MoE model, a router sends each token to only a few of the model's experts. Expert parallelism distributes those experts across GPUs, and "wide" expert parallelism spreads them across many GPUs so that each GPU hosts fewer experts. NVL72 systems connect 72 GPUs in a single NVLink domain, which provides the fast GPU-to-GPU communication needed to send tokens to experts that live on other GPUs. According to NVIDIA, this makes more efficient use of hardware during model training and inference, which should help organizations deploy large MoE models more cost-effectively as these models become more common in AI applications.
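The article does not describe how NVIDIA places experts or routes tokens. The Python snippet below is a minimal sketch of the general idea behind expert parallelism, built on three assumptions: a hypothetical model with 256 experts, a simple round-robin placement policy, and toy top-2 routing. The function names (`place_experts`, `experts_per_gpu`, `dispatch_load`) are illustrative only. The sketch shows how widening the GPU group reduces the number of experts each GPU hosts. It also counts how many token-to-expert assignments each GPU receives, which in a real system is the traffic carried by the all-to-all exchange.

```python
from collections import Counter


def place_experts(num_experts: int, num_gpus: int) -> dict[int, int]:
    """Hypothetical round-robin placement: expert e is hosted on GPU e % num_gpus."""
    return {e: e % num_gpus for e in range(num_experts)}


def experts_per_gpu(placement: dict[int, int]) -> Counter:
    """Count how many experts each GPU hosts under a given placement."""
    return Counter(placement.values())


def dispatch_load(routing: list[list[int]], placement: dict[int, int]) -> Counter:
    """Count the (token, expert) assignments each GPU must process.

    routing[i] lists the expert ids chosen for token i (e.g. a top-k router's output).
    Tokens whose chosen experts live on other GPUs have to be sent there.
    """
    load = Counter()
    for chosen in routing:
        for e in chosen:
            load[placement[e]] += 1
    return load


if __name__ == "__main__":
    NUM_EXPERTS = 256  # hypothetical model size, not taken from the article

    # Wider expert parallelism: the same experts spread over more GPUs.
    for gpus in (8, 72):
        per_gpu = experts_per_gpu(place_experts(NUM_EXPERTS, gpus))
        print(f"{gpus} GPUs: {min(per_gpu.values())}-{max(per_gpu.values())} experts per GPU")

    # Toy top-2 routing for 4 tokens on an 8-GPU placement.
    placement = place_experts(NUM_EXPERTS, 8)
    routing = [[0, 9], [1, 17], [8, 16], [3, 250]]
    print("assignments per GPU:", dict(dispatch_load(routing, placement)))
```

With 8 GPUs, every GPU holds 32 of the 256 experts. With 72 GPUs, round-robin leaves each GPU with only 3 or 4 experts, because 256 does not divide evenly by 72. The trade-off is that more tokens must cross GPUs, which is why a large, fast interconnect domain matters for this style of parallelism. Production systems typically also rebalance placement when some experts are much more popular than others; this sketch does not.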

NVIDIA NVL72 Revolutionizes MoE Model Scaling with Expert Parallelism
